Organizations that are just starting their HITRUST journey often ask what they need to score in order to be HITRUST certified and how the scoring process works. This is a complex question, and one best answered in multiple steps.

At a very high level, an organization needs an average PRISMA score of 3 or higher in each of the 19 Assessment Domains that the Requirement Statements are spread across. HITRUST CSF consists of the following Assessment Domains:

| Domains 1-10 | Domains 11-19 |
|---|---|
| 1. Information Protection Program | 11. Access Control |
| 2. Endpoint Protection | 12. Audit Logging & Monitoring |
| 3. Portable Media Security | 13. Education, Training & Awareness |
| 4. Mobile Device Security | 14. Third-Party Assurance |
| 5. Wireless Security | 15. Incident Management |
| 6. Configuration Management | 16. Business Continuity & Disaster Recovery |
| 7. Vulnerability Management | 17. Risk Management |
| 8. Network Protection | 18. Physical & Environmental Security |
| 9. Transmission Protection | 19. Data Protection & Privacy |
| 10. Password Management | |

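Each Requirement Statement is scored in five areas, and each area carries its own weight toward the overall score:

| Area | Weighting |
|---|---|
| Policy | 15% |
| Procedure | 20% |
| Implemented | 40% |
| Measured | 10% |
| Managed | 15% |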

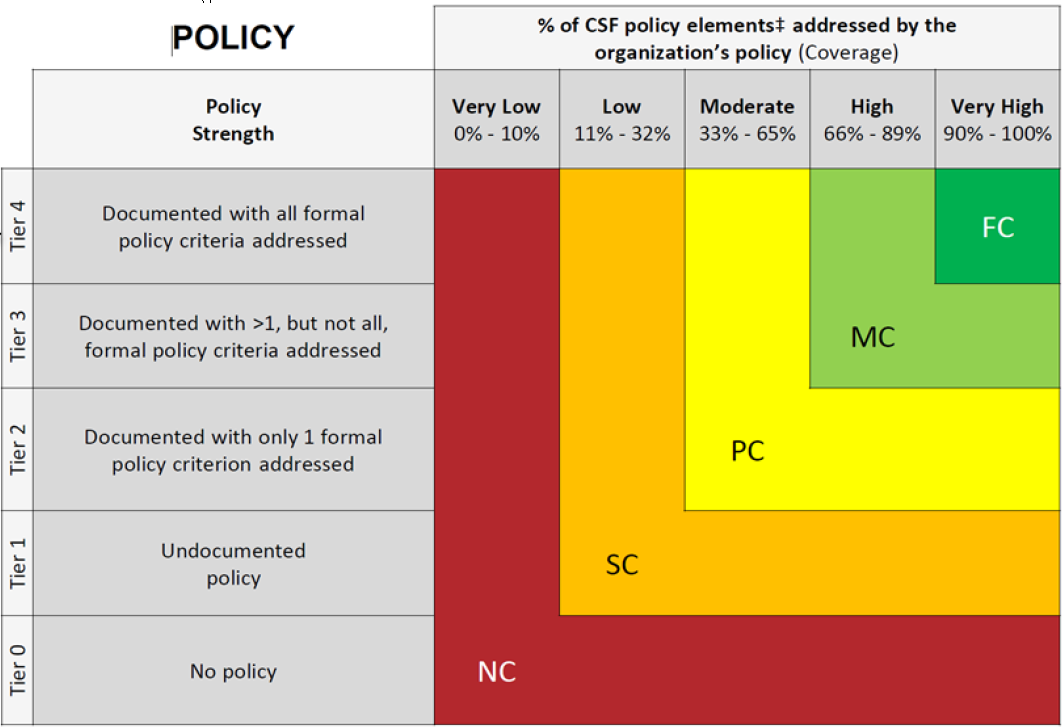

To understand how scores are derived, start with the HITRUST Scoring Rubric, which can be found here: https://hitrustalliance.net/content/uploads/HITRUST-CSF-Control-Maturity-Scoring-Rubrics.pdf. The rubric contains many considerations for each of the scoring areas: Policy, Procedure, Implemented, Measured, and Managed. A deep dive on each would require more space than this blog allows, so let’s look more closely at just the first area assessed, “Policy,” to give some context. The Policy section of the rubric is as follows:

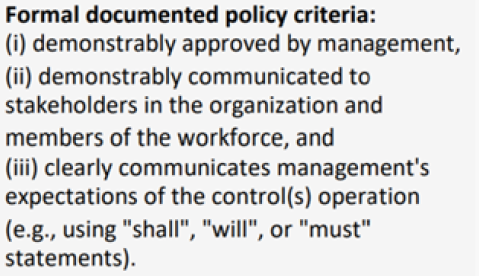

A nice thing about this rubric is that should the user come across any terms under the “Policy Strength” column that they don’t understand, they are defined in the concepts page of the rubric (page 2). On this second page, one can also find specifics on the “policy criteria” being referenced in the same column:

Going back to our example statement, “access to network equipment shall be physically protected”: if all three of the policy criteria are met, the statement grades out at tier 4, which equates to the “Very High – 90%-100%” block in the rubric. (Similarly, if only two policy criteria were met, it would fall into the “High 66% - 89%” range, and so on.)

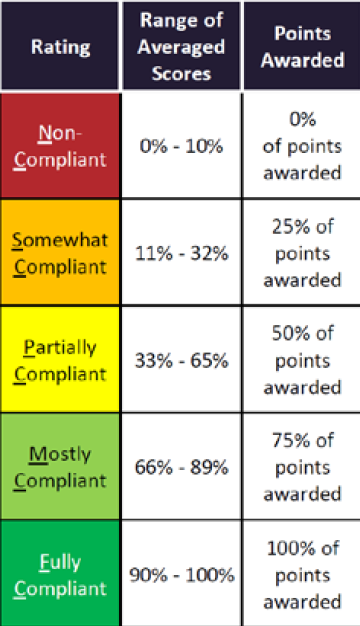

Once this range of scores is determined, it maps to the points awarded, as defined in the scoring legend below:

So again, if all three criteria were addressed, the “Range of Averaged Scores” would be 90% - 100%, and therefore 100% of the “Points Awarded” would be the Policy score. Remember that Policy is weighted at 15% of the overall score, so the true number of points for Policy is 15% of those 100 points. In other words, 15 Policy points would go toward the overall score.

The same process repeats for Procedure, Implemented, Measured, and Managed, using the scoring rubric definitions for those areas. Hypothetically, let’s say the results were 100% of points awarded for Implemented, 50% for Procedure, and 25% each for Measured and Managed. The following table breaks down how the official score for that Requirement Statement would be calculated:

| Area | % Points Awarded | Weighting | Points |
|---|---|---|---|
| Policy | 100 | 15% | 15 |
| Procedure | 50 | 20% | 10 |
| Implemented | 100 | 40% | 40 |
| Measured | 25 | 10% | 2.5 |
| Managed | 25 | 15% | 3.75 |
| Overall Score | | | 71.25 |
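
For readers who prefer to see the arithmetic as code, here is a minimal Python sketch of the weighting step. It assumes the five weights from the weighting table earlier in this post; the names `WEIGHTS`, `weighted_points`, and `raw_score` are hypothetical, and the "all areas at 75%" input is a made-up illustration rather than a result from the example above.

```python
# Weight of each scoring area, in percent, as listed in the weighting table
# earlier in this post. Names here are illustrative, not HITRUST terminology.
WEIGHTS = {
    "Policy": 15,
    "Procedure": 20,
    "Implemented": 40,
    "Measured": 10,
    "Managed": 15,
}


def weighted_points(area, pct_awarded):
    """Points one area contributes: its weight applied to the % of points awarded."""
    return WEIGHTS[area] * pct_awarded / 100


def raw_score(pct_awarded_by_area):
    """Raw score for a Requirement Statement: the sum of all weighted area points."""
    return sum(weighted_points(area, pct) for area, pct in pct_awarded_by_area.items())


# Policy example from the text: 100% of points awarded at a 15% weight.
print(weighted_points("Policy", 100))  # 15.0

# Hypothetical input: every area awarded 75% of its points.
print(raw_score({area: 75 for area in WEIGHTS}))  # 75.0
```

Keeping the weights as whole-number percentages avoids small floating-point rounding surprises in the printed totals.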

That’s a total of 71.25 points, but that number alone doesn’t show how it equates to a PRISMA score. One more conversion is needed, this time using the “Score to Rating Conversion” table, which maps the raw score to a PRISMA score.

Below is the Score to Rating Conversion table:

| Score Greater Than | PRISMA Score |
|---|---|
| 0 | 1- |
| 9.99 | 1 |
| 18.99 | 1+ |
| 26.99 | 2- |
| 35.99 | 2 |
| 44.99 | 2+ |
| 52.99 | 3- |
| 61.99 | 3 |
| 70.99 | 3+ |
| 78.99 | 4- |
| 82.99 | 4 |
| 86.99 | 4+ |
| 89.99 | 5- |
| 93.99 | 5 |
| 97.99 | 5+ |

For this example, a score of 71.25 equates to a 3+ according to the conversion table. This is just one of many Requirement Statements under an Assessment Domain. If the same organization continued to score 3+ on its Requirement Statements, it would have no trouble meeting the average score of 3 (a raw score above 61.99) required in each Assessment Domain in order to be HITRUST certified.
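
The lookup described above can be sketched as a small Python function. This is only an illustration built from the Score to Rating Conversion table in this post; the names `CONVERSION` and `prisma_rating` are hypothetical, and treating a raw score of exactly 0 as "1-" is an assumption, since the table only covers scores greater than 0.

```python
# (threshold, rating) pairs copied from the Score to Rating Conversion table.
# A raw score strictly greater than the threshold earns at least that rating.
CONVERSION = [
    (0, "1-"), (9.99, "1"), (18.99, "1+"),
    (26.99, "2-"), (35.99, "2"), (44.99, "2+"),
    (52.99, "3-"), (61.99, "3"), (70.99, "3+"),
    (78.99, "4-"), (82.99, "4"), (86.99, "4+"),
    (89.99, "5-"), (93.99, "5"), (97.99, "5+"),
]


def prisma_rating(raw_score):
    """Return the highest rating whose threshold the raw score exceeds."""
    rating = CONVERSION[0][1]  # assumption: a score of exactly 0 is reported as "1-"
    for threshold, label in CONVERSION:
        if raw_score > threshold:
            rating = label
    return rating


print(prisma_rating(71.25))  # 3+
```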

The 3+ threshold is also important: any Requirement Statement that scores a 3+ or higher does not need a Corrective Action Plan (CAP), while any Requirement Statement that scores lower is required to have a CAP documented. This distinction is often confused. An average score of only 3 in an Assessment Domain is required for certification, while a score of 3+ is needed for an individual Requirement Statement to avoid a CAP.
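
To make the two thresholds concrete, here is a rough Python sketch. It assumes the raw-score cutoffs from the conversion table (above 70.99 for a 3+, above 61.99 for a 3) and assumes the domain average is a simple mean of raw Requirement Statement scores, which may not match how HITRUST's own tooling averages. The function names and the sample scores are hypothetical.

```python
CAP_CUTOFF = 70.99      # raw scores above this rate 3+ or higher
DOMAIN_CUTOFF = 61.99   # domain averages above this rate 3 or higher


def needs_cap(statement_score):
    """A Requirement Statement below 3+ needs a Corrective Action Plan."""
    return statement_score <= CAP_CUTOFF


def domain_meets_threshold(statement_scores):
    """Assumption: the domain average is the plain mean of raw statement scores."""
    average = sum(statement_scores) / len(statement_scores)
    return average > DOMAIN_CUTOFF


# Hypothetical domain with three Requirement Statements.
scores = [71.25, 65.0, 62.5]
print(domain_meets_threshold(scores))   # True
print([needs_cap(s) for s in scores])   # [False, True, True]
```

In this made-up domain the average clears the certification bar, yet two of the three statements would still need CAPs, which is exactly the distinction described above.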

Because it requires multiple steps and conversions, the HITRUST scoring process can be overwhelming, especially when described alongside all the other details of the HITRUST certification process. It can help to see each step broken down with some general context around HITRUST scoring, how it is derived, and how it ties to HITRUST certification. Hopefully, after this walkthrough, the process seems a little less intimidating.