Frameworks and standards like PCI DSS were part of the regulation and compliance industry long before any of us overheard terms like “the cloud” or “containers” at our local coffee shop. And although you will not currently find specific detail on cloud-native technologies like containers in the PCI DSS standard, that does not mean your organization can expect any latitude on PCI compliance just because it runs a containerized environment.

"The cloud migration services market is expected to reach USD 448.34 billion by 2025, at a CAGR of 28.89% over the forecast period 2020 - 2025."

- Cloud Migration Market Report, 2020

"By 2022, more than 75% of global organizations will be running containerized applications in production, up from less than 30% today."

- “Forecast Analysis: Container Management (Software and Services), Worldwide”, Gartner

Cloud-native technologies remain key drivers of the digital transformation we have become so accustomed to. But they are not only popular with businesses; they also have the attention of bad actors. As detailed in Verizon’s Data Breach Investigations Report (May 2020), 24% of breaches involved cloud-based assets. As organizations move ahead on their digital transformation journey, they inevitably face the challenge of also transforming their information security program. Such a security transformation also requires a transformation of how your organization approaches compliance.

Before we go any further, what exactly is a container? Containerization is an increasingly popular technology for running many instances of a server or application. It has resource, security, and isolation properties similar to those of virtual machines, but not the memory and performance overhead inherent in system-level hypervisors. Containers are designed to be deployed as very lightweight, short-lived groups of systems. This provides speed and scalability by increasing the quantity of containers in the group dynamically. Because of this, controls should focus on the image templates and group as an aggregate, not the individual containers within the group.

Security Properties

Containers have unique security properties.

- Declarative: The contents and all of their dependencies are explicitly laid out at build time.
- Inspectable: Contents can be statically checked without having to run the container.
- Immutable: Once created, containers cannot be modified. No updates, no patches, no configuration changes. If you must update the application code or apply a patch, you build a new image and redeploy it.

The Model

With containers and other cloud-native technologies, we get a new security model.

- Build from trusted sources: Ensure your container images are built from up-to-date, minimal base images from trusted sources.
- Implement streamlined security checkpoints: For example, implement tests and scans as part of your CI/CD (continuous integration/continuous delivery) pipeline.
- Shift left for declarative compliance: Maintain a policy-driven release practice by codifying your security requirements as early as possible. Then, be sure controls are in place so that only images that have gone through the necessary validations are admitted.

Shifting left simplifies compliance. Decoupling compliance controls from business logic with a cloud-native architecture allows your environment to adapt to evolving compliance requirements.

Detecting and remediating defects in the design phase also results in faster time to market and cost savings, since you will not have to go back and rebuild once you have already hit the implementation or testing phase. Simply put, you can shift left and declare your compliance outcome as code. Just be sure you make your QSA or auditor aware at audit time.
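As a minimal sketch of what declaring compliance as code can look like, the Python below models a release gate that admits an image only if every required validation has been recorded against its digest. The check names, the `ValidationRecord` type, and the digests are hypothetical and chosen only for illustration; in a real cluster this kind of rule would normally be enforced by an admission controller rather than a standalone script.

```python
from dataclasses import dataclass, field

# Hypothetical set of checks an image must pass before release.
REQUIRED_CHECKS = frozenset({"unit-tests", "vulnerability-scan", "base-image-approved"})


@dataclass
class ValidationRecord:
    """Checks recorded as passed for one image digest (illustrative type)."""
    digest: str
    passed_checks: set = field(default_factory=set)


def is_admitted(digest: str, records: dict) -> bool:
    """Admit an image only if every required check is recorded as passed."""
    record = records.get(digest)
    if record is None:
        return False  # unknown images are rejected by default
    return REQUIRED_CHECKS <= record.passed_checks


# Example data: one fully validated image and one that skipped checks.
records = {
    "sha256:aaa": ValidationRecord("sha256:aaa", set(REQUIRED_CHECKS)),
    "sha256:bbb": ValidationRecord("sha256:bbb", {"unit-tests"}),
}
```

Because the rule lives in version control alongside the application, its change history can double as evidence for the auditor.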

The challenges of demonstrating compliance will raise anxiety levels when the “audit bell” rings. When you hear that bell, remember these two key aspects of a PCI DSS assessment:

- Scope your PCI audit accurately
- Evidence controls

Scoping is an easy task in a monolithic application, with one application server and a backend server running in a single data center. But in a container-based architecture, containers are often running in different clouds, and trying to draw a boundary can feel like sweeping sand off the beach. Additionally, with different containers in different clouds, illustrating the shared responsibilities between you and your cloud providers can induce a headache!

Kubernetes

For the purposes of explanation, we will use the Kubernetes architecture as the basis of a cloud-native ecosystem for this discussion. That said, before we continue with how to demonstrate PCI DSS compliance, let’s quickly break down a few basic concepts.

• Pod

Think of a pod as a container for your containers. A pod is essentially a logical wrapper for a container to execute.

• Node

Nodes, or worker nodes, are the virtual or physical machines in which pods run.

• Cluster

Clusters consist of a set of nodes that run your containerized applications. Every cluster has at least one node.

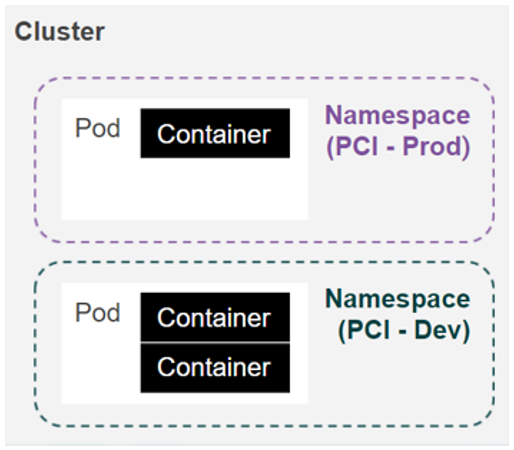

Another important concept to keep in your kit bag is the use of namespaces. A namespace is a virtual cluster, backed by the same physical cluster, that serves as a logical border enabling you to divide cluster resources between multiple teams or projects. Simply put, namespaces are a key enabler for isolation in your cloud-native environment. We will revisit the idea of namespaces a little bit later.

Common Compliance Requirements

The first step in your quest to demonstrate PCI DSS compliance requirements is to ask yourself the following questions:

- Segmentation and Networking: How do we isolate workloads with different risk profiles? Which parts of our environment do we need to make compliant?
- Identity and Access Management: Have we implemented the right access controls?
- Data Security and Encryption: Can we attest that our payment card data is properly secured?
- Continuous Monitoring: How do we detect common vulnerabilities in applications?
- Secure Supply Chain: Are we deploying up-to-date, trusted workloads?
- The Human Factor: Who are the stakeholders?

In the sections below, we will cover each of these common requirements.

Segmentation and Access Management

While the PCI DSS clearly states that segmenting your cardholder data environment from the rest of your network is NOT a requirement, it strongly recommends segmentation, with the caveat that the flatter your network, the larger the portion of it that will be in scope for a PCI DSS assessment.

With respect to containers, there are two main types of segmentation.

Macro-segmentation includes actions you can take outside of the cluster. For example:

- User segmentation (Identity and Access Management): Enforcing access on a “need to know” or “least privilege” basis.
- Network segmentation: Limiting access to trusted or private networks only.
- Application segmentation: Isolating your “in-scope” and “out-of-scope” applications.

Micro-segmentation is the segmentation you build within the cluster. This includes:

- Cluster segmentation: Limiting your “in-scope” pods, and ensuring they are appropriately isolated.

The key takeaway is that cloud-native technologies like containers give you the flexibility to build layers of segmentation that best suit your business needs.

Cluster Segmentation

In a cluster, every pod gets its own IP address, scoped to the cluster. By default, pods can reach each other, even across nodes. To achieve segmentation at the cluster level, you can restrict traffic between PCI pods and non-PCI pods by employing network policies that allow or deny traffic based on IP rules.
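The sketch below shows roughly what such a network policy could contain, built as a Python dictionary and printed as JSON that can be saved and applied to a cluster. It selects pods by label rather than by IP block, which NetworkPolicy also supports. The `pci-scope` label, the policy name, and the `payments` namespace are assumptions made for illustration, and the policy only takes effect if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def pci_network_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that only lets PCI-labelled pods talk to PCI pods."""
    pci_selector = {"matchLabels": {"pci-scope": "in"}}  # hypothetical label
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "pci-isolation", "namespace": namespace},
        "spec": {
            # The pods this policy applies to.
            "podSelector": pci_selector,
            "policyTypes": ["Ingress", "Egress"],
            # Only traffic from and to other PCI-labelled pods is allowed.
            "ingress": [{"from": [{"podSelector": pci_selector}]}],
            "egress": [{"to": [{"podSelector": pci_selector}]}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pci_network_policy("payments"), indent=2))
```

A policy this strict also blocks egress to cluster DNS, so a working deployment would likely need an extra egress rule for name resolution.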

Namespaces

We are not done with our segmentation mission just yet. Demonstrating the separation of development environments from production environments still has to be addressed.

Namespaces are API objects that partition a single cluster into multiple virtual clusters. They can be created as needed: per user, per application, per department, and so on. They provide each user community with its own policies, delegated management, and resource consumption limits.

Use namespaces wherever you need to separate application profiles, for example when one application handles PCI data and another does not.
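As an illustrative sketch, the Python below builds the two objects such a split typically involves: a Namespace carrying a scope label, and a ResourceQuota that caps how many pods may run in it. The `pci-scope` label, the object names, and the pod limit are hypothetical values, not something prescribed by PCI DSS or Kubernetes.

```python
import json


def namespace_with_quota(name: str, in_pci_scope: bool, max_pods: int) -> list:
    """Return a Namespace manifest and a ResourceQuota limiting pods in it."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Hypothetical label recording whether the namespace is in scope.
            "labels": {"pci-scope": "in" if in_pci_scope else "out"},
        },
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{name}-quota", "namespace": name},
        # Quota values are expressed as strings in the manifest.
        "spec": {"hard": {"pods": str(max_pods)}},
    }
    return [namespace, quota]


if __name__ == "__main__":
    for manifest in namespace_with_quota("payments", True, 20):
        print(json.dumps(manifest, indent=2))
```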

What if You Need Stronger Isolation?

A service mesh is a dedicated infrastructure layer that simplifies the process of connecting, protecting, and monitoring your microservices. If stronger isolation is something you talk about in your weekly meetings, service mesh can fulfill the need for stronger segmentation of users, networks, and applications.

Service mesh can also provide you with the following benefits:

- Assist with the implementation of a strong Zero Trust Network.
- Enable the use of mTLS (mutual TLS) to encrypt service to service calls. With mTLS, the client and server each verify each other’s identities before proceeding on to the HTTP exchange.
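To make the "mutual" part of mTLS concrete, here is a minimal sketch using Python's standard `ssl` module. It is not how a service mesh is configured (a mesh typically handles certificates for you); it only shows the server-side setting that forces the client to prove its identity too. The function name and its file-path parameters are hypothetical.

```python
import ssl
from typing import Optional


def mtls_server_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that rejects clients without a valid certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED is what makes the handshake mutual: the client must
    # present a certificate that chains to a CA this server trusts.
    context.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        context.load_verify_locations(cafile=ca_file)  # CAs allowed to sign client certs
    if cert_file and key_file:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)  # server identity
    return context
```

With ordinary TLS only the server presents a certificate; requiring one from the client as well is what lets each service verify who is calling it.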

Whether you employ tools like service mesh or not, always consider the fundamentals. The cleanest and easiest way to achieve segmentation is to completely separate the Kubernetes clusters that house payment card data from the clusters that do not.

Data Security and Encryption

Best Practices

Ensuring the adequate protection of your data in a cloud environment can be very challenging and quite complex. With respect to PCI DSS compliance, consider five key best practices to help your organization secure its information assets.

Maintain an Asset Inventory

It is impossible to protect something if you don’t even know where it is. For that reason, it is imperative that you establish and maintain an asset inventory so you can consistently track data across your organization. Additionally, distinguish your on-premises assets from your public cloud assets.

Understand Shared Responsibility

To achieve compliance in the cloud, you must understand where your provider’s responsibility ends, and where yours begins. The answer isn’t always clear-cut, and definitions of the shared responsibility security model can vary between service providers and can change based on whether you are using infrastructure-as-a-service (IaaS) or platform-as-a-service (PaaS).

It is also important to know which encryption modules your cloud provider offers, and whether they are PCI compliant.

Key Management

When it comes to key management, ensure your data encrypting keys (DEKs) are separate from your key encrypting keys (KEKs), and that the encryption for your KEKs is at least as strong as that of your DEKs. For reference, NIST SP 800-57 describes equivalence between different cipher types (e.g., RSA-3072 offers security strength comparable to AES-128).
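The strength comparison can be expressed as a small lookup. The sketch below uses the approximate security strengths (in bits) from the comparable-strength table in NIST SP 800-57 Part 1; the function name and the algorithm labels are illustrative choices, and only a handful of algorithms are listed.

```python
# Approximate security strength in bits, following the comparable-strength
# table in NIST SP 800-57 Part 1.
SECURITY_BITS = {
    "AES-128": 128,
    "AES-192": 192,
    "AES-256": 256,
    "RSA-2048": 112,
    "RSA-3072": 128,
    "RSA-7680": 192,
    "RSA-15360": 256,
}


def kek_is_strong_enough(kek_algorithm: str, dek_algorithm: str) -> bool:
    """Return True when the KEK is at least as strong as the DEK it protects."""
    return SECURITY_BITS[kek_algorithm] >= SECURITY_BITS[dek_algorithm]


print(kek_is_strong_enough("RSA-3072", "AES-128"))  # True
print(kek_is_strong_enough("RSA-2048", "AES-128"))  # False
```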

Set up key rotation policies. Doing so:

- Keeps your system resilient when a manual rotation becomes necessary.
- Helps protect against brute force attacks by limiting the number of messages encrypted with the same key.
- Limits the number of actual messages vulnerable in the event of a compromise.
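A rotation policy ultimately reduces to a rule like the one sketched below. The 90-day period is a hypothetical value chosen for illustration, not a PCI DSS figure; managed key services usually let you set the schedule directly instead of checking it yourself.

```python
from datetime import datetime, timedelta

# Hypothetical rotation period; choose one that matches your own key policy.
ROTATION_PERIOD = timedelta(days=90)


def rotation_due(created_at: datetime, now: datetime) -> bool:
    """Return True once a key has been in use for the full rotation period."""
    return now - created_at >= ROTATION_PERIOD
```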

Time to Live Mechanisms

Maintaining compliant data retention periods is another challenge in the cloud.

- Make sure you understand how your cloud provider handles data deletion.
- After your data is deleted, SHRED THE KEYS to make them unrecoverable.

Don’t Forget About Your Secrets

Passwords and API keys are examples of “secrets” and they need to be encrypted as well. Be sure to check your default settings. For example, by default, all Kubernetes secrets are base64 encoded and stored in etcd. To clarify, Base64 is NOT encryption.

Encoding substitutes one representation of a message for another, and anyone who knows the scheme can reverse it. “I acknowledge your message” is often encoded as “10-4 good buddy”. Encryption, on the other hand, uses mathematical algorithms and a secret key to encrypt and decrypt messages. Bottom line: anyone with access to the etcd cluster also has access to all your Kubernetes secrets, effectively in PLAINTEXT.
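A few lines of Python show how little protection base64 offers. The value `password` is just a sample secret.

```python
import base64

# What a secret value looks like when it is only base64 encoded.
encoded = base64.b64encode(b"password").decode("ascii")
print(encoded)  # cGFzc3dvcmQ=

# Decoding needs no key at all, which is why this is not encryption.
decoded = base64.b64decode(encoded).decode("ascii")
print(decoded)  # password
```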

Continuous Monitoring

For PCI DSS, there are specific things to log and monitor with your runtime security.

- Events must be traceable back to the actual user.
- Security events must be monitored.
- Logs must contain details such as user, event, date, and time.
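One way to keep yourself honest about the last point is a completeness check on log records before they are shipped. The sketch below assumes a record is a plain dictionary; the field names mirror the list above and are not taken from any particular logging product.

```python
# Fields every log record is expected to carry (names are illustrative).
REQUIRED_FIELDS = ("user", "event", "date", "time")


def missing_fields(record: dict) -> list:
    """Return the required fields that are absent or empty in a log record."""
    return [name for name in REQUIRED_FIELDS if not record.get(name)]
```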

The good news is that many of the logs you use with your cloud provider are the same ones you will use for your cloud-native technologies. However, it is important to take the time to understand the logging processes of your cloud-native technologies so you can implement the appropriate log policies.

For example, Kubernetes audit logs record the actions taken in a cluster, and events can be generated at multiple stages of a request’s lifecycle. They give the cluster administrator the knowledge of the 7 W’s:

- What happened
- When it happened
- Who initiated it
- Where it was initiated from
- On what it happened
- Where it was observed
- Where it was going

Kubernetes offers four different audit log levels.

- None: Nothing is logged.
- Metadata: Logs request metadata (requesting user, timestamp, resource, and a verb). This is a good choice for operations where the verb is enough to tell you what happened.
- Request: Logs event metadata and the request body.
- RequestResponse: Produces the most verbose logs, including the event metadata, request body, and response body.
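These levels are assigned through an audit policy, in which the first matching rule sets the level for an event. The sketch below builds a small policy as a Python dictionary: Secrets are logged at `Metadata` so their values never reach the audit log, and everything else falls through to a default level. The choice of levels here is an assumption for illustration, not a PCI DSS mandate, and the policy is printed as JSON, which is also valid YAML.

```python
import json

AUDIT_LEVELS = ("None", "Metadata", "Request", "RequestResponse")


def audit_policy(secrets_level: str = "Metadata", default_level: str = "Request") -> dict:
    """Build an audit policy with one rule for Secrets and a catch-all rule."""
    for level in (secrets_level, default_level):
        if level not in AUDIT_LEVELS:
            raise ValueError(f"unknown audit level: {level}")
    return {
        "apiVersion": "audit.k8s.io/v1",
        "kind": "Policy",
        "rules": [
            # Matched first: avoid writing secret contents into the audit log.
            {"level": secrets_level, "resources": [{"group": "", "resources": ["secrets"]}]},
            # Catch-all for every other request.
            {"level": default_level},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(audit_policy(), indent=2))
```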

The Secure Software Supply Chain

The software supply chain is the last common compliance requirement we are going to cover. Setting up a CI/CD pipeline enables your development team to speed up your release lifecycle and deliver more reliable code changes. CI/CD automates the deployment steps, so your team’s focus can be on business requirements, code quality, and security.

There are six stages to ensure you are deploying up-to-date trusted workloads.

Stage 1 - Code: Developers commit the code and push to git.

Stage 2 - Build: Your CI/CD pipeline is triggered and begins running the new code through the pipeline. An image is built.

Stage 3 - Test: The container image is tested to confirm that it runs.

Stage 4 - Scan: As images are pushed to the registry, they can be scanned for known security vulnerabilities. As required by PCI DSS, the findings are categorized and ranked, then pushed to a structured image knowledge base.

Stage 5 - Deploy: Open source tools can be utilized to create policy as code. For example, any image with a vulnerability ranked higher than medium is not allowed to be deployed into production.

Stage 6 - Run: The new image is deployed; the old image is deleted.
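The deploy-stage rule can be sketched in a few lines of Python. The four-step severity scale and the function name are assumptions made for illustration; real scanners and policy engines each define their own ranking scheme and policy syntax.

```python
# Assumed severity scale, ordered from least to most severe.
SEVERITY_ORDER = ("low", "medium", "high", "critical")


def deployable(finding_severities: list, max_allowed: str = "medium") -> bool:
    """Allow deployment only if no finding is ranked above the allowed severity."""
    limit = SEVERITY_ORDER.index(max_allowed)
    return all(SEVERITY_ORDER.index(severity) <= limit for severity in finding_severities)


print(deployable(["low", "medium"]))  # True
print(deployable(["low", "high"]))    # False
```

An image with no findings passes, so the gate is only as good as the scan that feeds it.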

Your containers may interact with other parts of the infrastructure when processing payment card data. For example, data may be stored in a separate cloud-based database service. Additionally, serverless functions could be in place to help process payments. Whatever you do, for any part of the infrastructure that your containers interact with when handling payment card data, make sure that you are securing connections appropriately, and creating compliance reports. In other words, do NOT overlook the fact that PCI DSS compliance requirements don’t end with your containers themselves.

We cannot forget the people side of compliance. In some cases, it can be the hardest part!

There are three main stakeholders to consider when running regulated workloads: the makers, the internal assessors, and the external assessors. Regardless of the category you may fall under, it is very important to understand your counterpart’s point of view. Here are some essential considerations regarding these PCI DSS compliance stakeholders.

Makers

If you are working on a cloud-native application that must meet PCI requirements, you can count on working with the makers on a day-to-day basis. Makers are your developers, product owners, and enterprise architects. Makers should:

- Be an advocate for the compliance team by knowing and explaining the PCI DSS controls they need to meet.
- Understand how having clear documentation and diagrams will pay dividends in the end with their auditors.
- Explain how the git workflow can be used as an audit trail later.

Internal Assessors

Internal assessors are composed of your Security, Risk and Compliance, and Legal teams.

- Internal assessors are often less likely to understand cloud-native technologies.
- Do not leave your empathy at home! Understand you all work for the same company, and ultimately share the same goals.
- Make them feel like part of the team. Internal assessors should be invited early and often to the developer stand-ups, architecture reviews, or other means of collaboration. You will also find it very useful to include them in events like learning sessions and brown bags.
- In many cases, it is also very helpful to schedule check-ins with your legal teams to discuss documentation and/or diagrams.

External Assessors

External assessors probably are not familiar with your applications. Unlike internal assessors, they do not share the same goal as your internal teams. If you are a maker, apply all the “empathy practice” you have under your belt from working with the internal assessors to the external assessors as well.

- Recognize they are here to help you pass your audit.
- Keep in mind QSAs audit a vast array of different systems, from mainframes to cloud-native technologies.
- Understand where your auditor is coming from and that they probably have a desire to understand and learn new technologies. Teach people what you know!
- Take the extra time to explain the concepts (or, if involving your security and GRC teams early paid off, let them take this off your plate).

Processes and Advocacy

From a compliance standpoint, it doesn’t matter how cutting edge or locked down your applications or cloud are if you cannot articulate it to your external assessors.

Diagrams and documentation are crucial when working with auditors as they set the stage for your compliance story. Here are some tips:

- Start simple, for example with segmentation.
- Drill deeper into the next diagram.
- Drill deeper into the next diagram, and so on.
- Do not be afraid to have too many diagrams!

PCI DSS requires a ‘High-level Network Diagram’, a ‘Detailed Network Diagram’, and ‘Dataflow Diagram(s)’, but by adding supplemental diagrams you can significantly improve the audit process.

Documentation should be used to support your diagrams, as well as to supplement your policies and procedures, as necessary. It can come in many forms, such as Confluence pages, a wiki, or other collaboration tools.

The key thing to remember is BE PREPARED TO TELL YOUR COMPLIANCE STORY! Otherwise, you may find yourself on a search and rescue mission for additional evidence to give your auditor.

“If you think compliance is expensive – try non-compliance.”

- Former U.S. Deputy Attorney General Paul McNulty

When it comes to PCI DSS, determining whether a cloud-native deployment is compliant is challenging for both its users and assessors, especially since the requirements are not specific to containers. However, combined with the proper dose of advocacy and empathy, detailed documentation, automation (Infrastructure as Code and Policy as Code), and a clear understanding of the shared responsibility model, such technologies are much less of a hurdle when it comes to painting your PCI DSS compliance picture.

The PCI SSC 2020 North America Community Meeting, Making PCI Compliance Cloud-Native:

Travis Powell, Director, Training Programs, PCI Security Standards Council; Zeal Somani, Security and Compliance Specialist, Google; Ann Wallace, Security Solutions Manager, Google

PCI SSC Cloud Computing Guidelines – Information Supplement:

https://www.pcisecuritystandards.org/pdfs/PCI_SSC_Cloud_Guidelines_v3.pdf

2020 Verizon Data Breach Investigations Report (DBIR):

https://enterprise.verizon.com/resources/reports/dbir/

Kubernetes documentation:

https://kubernetes.io/docs/home/

Gartner Newsroom:

Shared Responsibility Model:

https://cloudsecurityalliance.org/blog/2020/08/26/shared-responsibility-model-explained/

Cloud Migration Market - Growth, Trends, Forecasts (2020 - 2025):

https://www.mordorintelligence.com/industry-reports/cloud-migration-services-market