Docker and PCI Compliance

Published: Mar 22, 2017

Last Updated: Nov 9, 2023

Executive Summary

Docker is an advanced framework for deploying applications--in particular, cloud applications. It is notably different from working within traditional virtualization environments or “standard” image-based cloud deployments at Amazon or Microsoft. With that comes opportunity for deployment engineers, but also challenges for security and compliance professionals. This post provides some perspective on the technical architecture of Docker and on specific use cases for configuring Docker containers for PCI compliance. Where I could, I provide screenshots and examples from a test Docker environment created for this purpose.

What is Docker?

It is easiest to think about Docker in terms of shipping--almost like they named it because of that! Today’s applications often require custom server configurations and very specific software package versions to function properly. This becomes a nightmare if you must rebuild an application server or build a new one, or if you want to move your application into the cloud (e.g., AWS, Digital Ocean). Even internally, making sure that your testing/staging environments mirror production is important, because if those servers aren’t configured the same way, an application that functions fine in testing may not in production. Docker aims to solve the problems of portability and dependency management by wrapping the application into a “container,” much like a real shipping container. Real shipping containers have standard dimensions and hook-ups and fit equally well onto cargo ships, rail cars, and trucks, which makes for easy portability. Back in the virtual world, a Docker container is a self-contained unit that includes a stripped-down operating system (e.g., Ubuntu, CentOS) along with all the application’s packages, libraries, and dependencies. Now when you want to move an application into production or to a cloud service provider, it is as simple as pulling the container image down and running it on any system running the Docker-engine.

Images vs. Containers

Before digging deeper into Docker, it is important to understand these two fundamental Docker concepts.

- Image: A container image is the base from which containers are launched. It is an immutable snapshot of a container and is created using the “build” command, or by “pulling” an image from a container registry. As noted below, this is slightly different than a virtual image, which was defined by VMware and utilized in a cloud environment, such as AWS.

- Container: When you use the Docker “run” command, you are creating a container, which is a running instance of an image. You can launch essentially unlimited containers from the same image. Any container you launch can be altered however you please, but those changes affect only that container instance, not the image it was launched from. For example, if you launch a container from an image called “base” and install vim on it, then launch another container from the “base” image, vim will not be installed on that second container. If you want to save your running container instance as its own image, you use the “commit” command.
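The relationship between the two concepts can be sketched as a toy model. This is only an illustration of the semantics described above, not how Docker stores images; the class names, the `run` helper, and the "base-with-vim" name are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Immutable snapshot: a name plus a fixed set of installed packages."""
    name: str
    packages: frozenset


@dataclass
class Container:
    """Writable instance launched from an image."""
    image: Image
    packages: set = field(default_factory=set)

    def install(self, package: str) -> None:
        # Changes land in this container only, never in the image.
        self.packages.add(package)

    def commit(self, new_name: str) -> Image:
        # Analogous to "commit": freeze the current state as a new image.
        return Image(new_name, frozenset(self.packages))


def run(image: Image) -> Container:
    # Analogous to "run": each container starts from its own copy of the image contents.
    return Container(image, set(image.packages))


base = Image("base", frozenset({"bash"}))
first = run(base)
first.install("vim")
second = run(base)
with_vim = first.commit("base-with-vim")

print("vim" in first.packages)     # True
print("vim" in second.packages)    # False
print("vim" in with_vim.packages)  # True
```

The second container never sees vim because it was launched from the unchanged “base” image; only the committed image carries the change forward.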

How does Docker work under the hood?

There are a lot of misconceptions around containers. The most common is that a Docker container is equivalent to a virtual machine (VM). Though to the end-user a container is functionally very similar to a VM, there is nothing virtualized in a Docker container. A true virtual machine virtualizes everything from the hardware level up, while a container shares the host’s operating system kernel. This is why Docker only works natively on Linux; while it is supported on Windows and Mac, on those systems a Linux virtual machine is run to host the Docker-engine. Docker uses underlying Linux technologies to isolate the container from other containers running on the host and from the host itself.

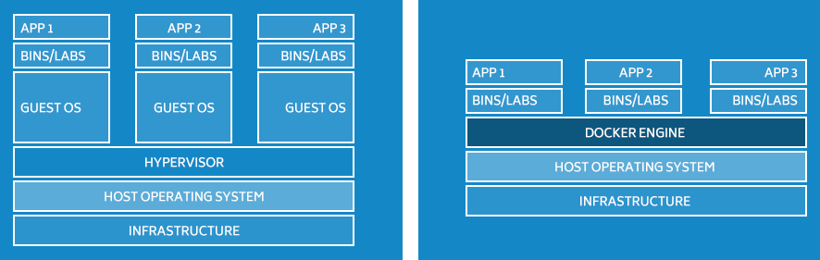

I think the Docker website explains it best with this graphic. A representation of a virtual machine is on the left and a Docker container is on the right.

Source: https://www.docker.com/what-docker


As you can see, the Docker-engine itself acts as an orchestration layer for two main Linux technologies: namespaces and control groups.

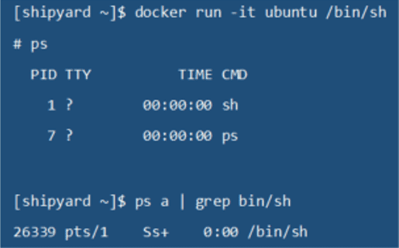

Linux namespaces essentially abstract a system resource, such as process identifiers (PIDs) or mount points, and present it to the processes within the namespace as if they had their own isolated instance of the resource, even though the resource exists on the host itself. The example below shows how a container views its processes versus those that exist on the system running the Docker-engine.

As you can see, I have started up an Ubuntu Linux container and listed out the running processes. Notice that the /bin/bash process is PID 1 within the container. The second ps command in the screenshot was run on the Docker-host, and the /bin/bash PID is 26339.

The Linux kernel provides seven namespaces:

- Cgroup: View of a process's cgroups

- IPC: Isolate certain IPC resources

- Network: Provide isolation of the system resources associated with networking

- Mount: Provide isolation of the list of mount points seen by the processes in each namespace instance

- PID: Isolate the process ID number space, meaning that processes in different PID namespaces can have the same PID

- User: Isolate security-related identifiers and attributes, in particular, user IDs and group IDs

- UTS: Provide isolation of two system identifiers: the hostname and the NIS domain name

These seven namespaces present a container with all the resources necessary to function while keeping it isolated from the host and other containers. In other words, a container appears to have its own dedicated NIC, mount points, processes, and users while, in fact, these are being presented to it by the host system’s kernel.
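On a Linux system, the namespaces a process belongs to are visible as symbolic links under /proc/<pid>/ns. The following is a minimal sketch that lists them for the current process; the function name `list_namespaces` is hypothetical, and the code simply returns an empty result on systems where /proc is not available.

```python
import os


def list_namespaces(pid="self", proc_root="/proc"):
    """Return {namespace_name: identifier} for a process, or {} if unavailable."""
    ns_dir = os.path.join(proc_root, str(pid), "ns")
    result = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return result  # not Linux, or the process is not accessible
    for name in names:
        try:
            # Each entry is a symlink whose target has the form "type:[inode]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:10} {ident}")
```

Running this once on the Docker host and once inside a container should show different identifiers for the namespaces the container does not share with the host, since two processes are in the same namespace only when the identifiers match.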

Linux control groups (cgroups) “allow processes to be organized into hierarchical groups whose usage of various types of resources can then be limited and monitored.” Essentially, cgroups provide a mechanism to limit containers so that a single container cannot exhaust host resources such as CPU and memory.
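Each process's cgroup membership is listed in /proc/<pid>/cgroup, one line per hierarchy, in the form hierarchy-ID:controller-list:cgroup-path. The sketch below parses that format; the sample text and the "abc123" path are made up for illustration and are not output from a real host.

```python
from typing import List, NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: List[str]
    path: str


def parse_cgroup(text: str) -> List[CgroupEntry]:
    """Parse the contents of a /proc/<pid>/cgroup file."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split into at most three fields so that colons in the path survive.
        hierarchy, controllers, path = line.split(":", 2)
        names = [c for c in controllers.split(",") if c]
        entries.append(CgroupEntry(int(hierarchy), names, path))
    return entries


# Hypothetical sample: two cgroup v1 hierarchies and one cgroup v2 entry
# (v2 entries have hierarchy ID 0 and an empty controller list).
sample = (
    "4:memory:/docker/abc123\n"
    "3:cpu,cpuacct:/docker/abc123\n"
    "0::/docker/abc123\n"
)

for entry in parse_cgroup(sample):
    print(entry)
```

On a real host, passing the contents of /proc/<pid>/cgroup for a container's process would show which cgroup paths the Docker-engine placed it in.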

PCI DSS specific concerns with Docker

In the sections below, I have highlighted example PCI compliance implications for Docker. This is not meant to be an all-inclusive list for PCI or any other compliance standard. The focus here is on configuration and vulnerability management, though networking and logging are also touched upon.

1.2 Build firewall and router configurations that restrict connections between untrusted networks and any system components in the cardholder data environment.

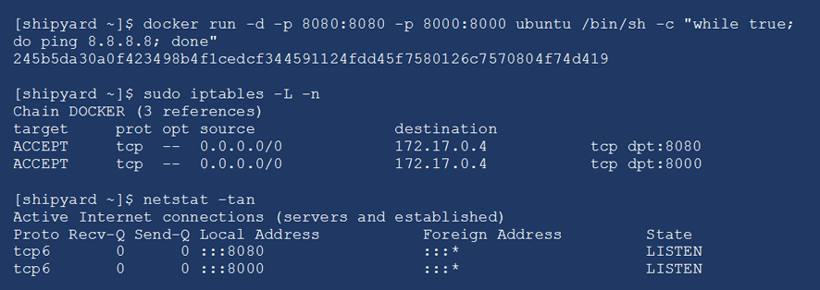

When you run a container and expose a network port - for example, to make a web server container accessible - the Docker daemon adds iptables rules, which make the ports available to the world. As you can see in the example below, I ran a container exposing ports TCP/8000 and TCP/8080. Looking at the iptables rules and netstat listeners on the host, those ports are open from any source address. This effectively makes the firewall not a firewall at all since it is hardly blocking anything.

PCI Controls

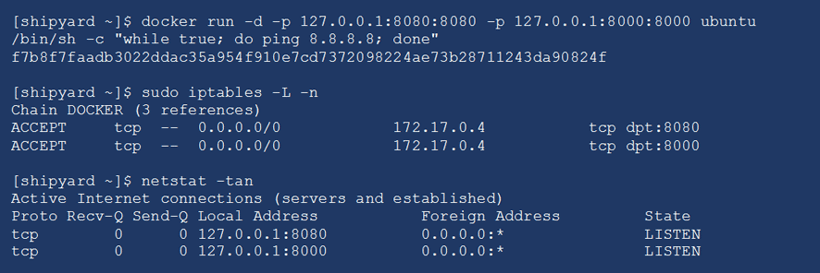

A better way to expose ports on a container is to use the following format:

“-p <ip_address>:<host_port>:<container_port>”

As seen in the example below, this still creates globally accessible iptables rules, but only causes the server to listen on the specific IP address.
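As a rough aid for reviewing how containers are launched, the sketch below parses a publish specification and reports whether it would listen on every host interface, which is the default when no IP address is given. The function names are hypothetical, and the parser only handles IPv4 addresses and the common `container`, `host:container`, and `ip:host:container` forms (with an optional `/tcp` or `/udp` suffix).

```python
import ipaddress
from typing import NamedTuple, Optional


class Publish(NamedTuple):
    host_ip: Optional[str]
    host_port: Optional[str]
    container_port: str
    protocol: str


def parse_publish(spec: str) -> Publish:
    """Parse the value given to "-p", e.g. "127.0.0.1:8080:80/tcp"."""
    port_part, _, protocol = spec.partition("/")
    parts = port_part.split(":")
    host_ip = None
    host_port = None
    if len(parts) == 1:
        container_port = parts[0]
    elif len(parts) == 2:
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
        ipaddress.IPv4Address(host_ip)  # raises ValueError if not valid IPv4
    else:
        raise ValueError(f"unsupported publish spec: {spec!r}")
    return Publish(host_ip, host_port or None, container_port, protocol or "tcp")


def listens_on_all_interfaces(spec: str) -> bool:
    """True when the spec does not pin the listener to a specific host address."""
    host_ip = parse_publish(spec).host_ip
    return host_ip is None or host_ip == "0.0.0.0"


print(listens_on_all_interfaces("8000:80"))            # True
print(listens_on_all_interfaces("127.0.0.1:8080:80"))  # False
```

A check like this could be run over deployment scripts to flag containers started without an explicit bind address.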

For container services that need to be accessible from outside of the host, I recommend writing your own iptables rules and preventing the Docker daemon from managing iptables by starting it with the “--iptables=false” flag. With this flag set, even if a network port is exposed while launching a container, no iptables rules are created.

2.2 Develop configuration standards for all system components. Assure that these standards address all known security vulnerabilities and are consistent with industry-accepted system hardening standards.

Host Hardening

If the Docker host itself is not sufficiently hardened, then the containers running on it are vulnerable, regardless of how secure they are themselves. Luckily, because Docker is becoming more and more widespread, there are published security hardening standards from CIS and NIST (see Sources and Links section). These hardening standards are critical to securing both the host and the Docker daemon.

PCI Controls

Create Docker host specific configuration standards that conform to the hardening benchmarks provided by CIS and NIST. As an even better step, some vulnerability scanners can audit a system (requires credentialed scan) against CIS benchmarks. Another option, though admittedly quite a bit more work, is to write InSpec tests which can audit your system against your configuration standards at any given time.

Trusted Registry

In the same vein as host hardening, Docker also presents another unique challenge when it comes to creating containers.

Docker maintains a public container registry called the Docker Hub. In this registry, major vendors such as Ubuntu, CentOS, Nginx, Apache, etc. create their own official container images, which can be pulled down by anybody. The Docker Hub also contains user-created images that are not verified by any major vendor, and thus could have security flaws or malware installed on them. Recently, Docker Hub enabled content trust, which allows the verification of the integrity and the publisher of containers. This can help prevent downloading tampered container images that may contain malware.

PCI Controls

Rather than simply pulling down a container image - for example, Nginx - it is much more secure to build your own Nginx image from scratch. This allows much more insight into and control over the packages installed and commands run when the image is built. Typically, this is done using what is called a Dockerfile. A Dockerfile is simply a set of instructions used to build a container image--it might include things like setting up users, installing packages, and running shell scripts.
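To give a feel for what such a file contains, here is a small sketch that renders a minimal Dockerfile from a base image, a package list, and a user name. The helper `render_dockerfile`, the "ubuntu:22.04" base image, the "curl" package, and the "appuser" account are all illustrative assumptions rather than anything prescribed by Docker or PCI; the generated instructions (FROM, RUN, USER) are standard Dockerfile instructions.

```python
def render_dockerfile(base_image, packages, user):
    """Return the text of a minimal Dockerfile for a Debian/Ubuntu-based image."""
    lines = [
        f"FROM {base_image}",
        # Install only the named packages and clean up the package index afterwards.
        "RUN apt-get update \\",
        f"    && apt-get install -y --no-install-recommends {' '.join(packages)} \\",
        "    && rm -rf /var/lib/apt/lists/*",
        # Create an unprivileged system account and switch to it.
        f"RUN useradd --system --no-create-home {user}",
        f"USER {user}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("ubuntu:22.04", ["curl"], "appuser"), end="")
```

Keeping a file like this under version control means every package and command that goes into the image can be reviewed before it is built.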

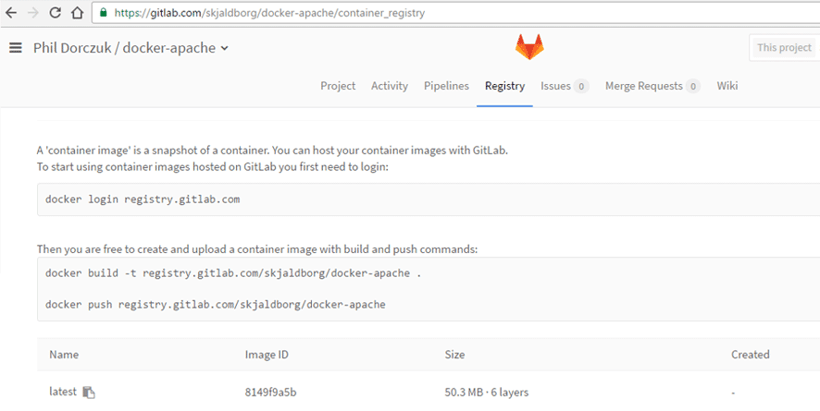

Once these images are created, a safe registry is necessary to store them. There are many options for this--for example, Docker Hub allows the storage of images in either public or private repositories, although the private repositories are not free. There are also other cloud-based registries, such as those offered by Google, Gitlab.com, and Quay.io.

Another option is hosting your own private container registry behind your firewall on an internal network. This is a nice option since it doesn’t require any further expense, and you have a lot more control over access to the registry.

In my example, I am using a Gitlab.com repository to act as my container registry. Gitlab.com is a great option because it combines a version control system, a CI/CD pipeline, and a container registry, so if you are already keeping your Dockerfiles under version control, Gitlab can fill a variety of functions in one UI.

2.2.1 Implement only one primary function per server to prevent functions that require different security levels from co-existing on the same server. (For example, web servers, database servers, and DNS should be implemented on separate servers.)

This is perhaps the trickiest PCI requirement to reconcile with containers. It goes without saying that a Docker host should only be a Docker host, but the containers themselves are less clear. All containers running on a host share the same kernel; as such, is there enough separation between containers and the host? Does running an Apache container and a MySQL container on the same Docker host violate this requirement? Or can each container be considered as its own server?

As mentioned earlier, Docker was designed with application portability in mind. While containers by nature provide some level of isolation from the host system and from other containers, they are not nearly as good as virtual machines at performing this isolation, as containers share the host’s kernel. The Docker daemon requires root access to function properly. This requires any user running Docker commands to either be a member of the “docker” group or use sudo to gain root privileges. In a default Docker configuration, any user who can run a Docker container can potentially get full root access on the host--a user who is root within a container is also root on the host itself. This is illustrated in the following examples.

Container Breakout Examples

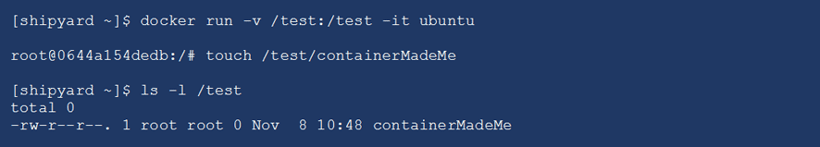

In this first example, a container is run with a host filesystem mounted. This allows the container to interact directly with the host file system and potentially introduce malware on the host or delete critical files. In the example below, I launch a container with a volume pointing to /test on the host, and then, from within the container, create a file. Afterwards, when looking at the folder on the host, you can see that the file exists on the host and is owned by root.

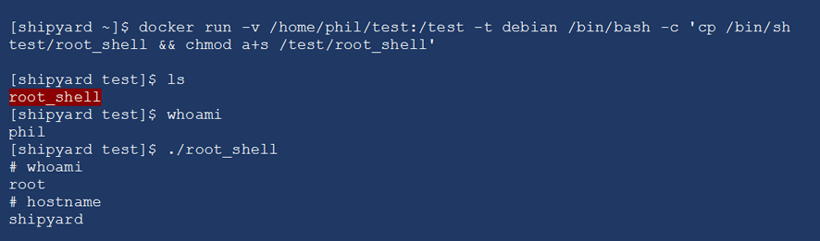

I can also use a very similar process to get a root shell on the host. In this example, I copy the container’s shell binary onto my host with the SUID permission set. The SUID flag (shown by the “s” character in the file permissions) causes a file to execute with the privileges of the file’s owner rather than those of the user running the command. In this case, the “root_shell” file is owned by root, so when I execute it, I end up in a root shell on my host.
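One way to look for files like “root_shell” on the host is to scan directories that are shared with containers for root-owned files carrying the SUID bit. The following is a minimal sketch using only the Python standard library; the function names are hypothetical, and the scan only covers regular files beneath the directory it is given.

```python
import os
import stat


def is_setuid(mode: int) -> bool:
    """True if the SUID bit is set in a file mode as returned by os.stat()."""
    return bool(mode & stat.S_ISUID)


def find_setuid_root_files(directory: str):
    """Yield regular files under `directory` that are owned by UID 0 and SUID."""
    for root, _dirs, files in os.walk(directory):
        for name in files:
            path = os.path.join(root, name)
            try:
                info = os.lstat(path)  # lstat: do not follow symlinks
            except OSError:
                continue
            if (
                stat.S_ISREG(info.st_mode)
                and info.st_uid == 0
                and is_setuid(info.st_mode)
            ):
                yield path


# The "s" in the owner-execute position is what "ls -l" shows for SUID files.
print(stat.filemode(0o104755))  # -rwsr-xr-x
print(is_setuid(0o104755))      # True
print(is_setuid(0o100755))      # False
```

Pointing `find_setuid_root_files` at a volume directory such as /test from the earlier example would list any such files created from inside a container.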

PCI Controls

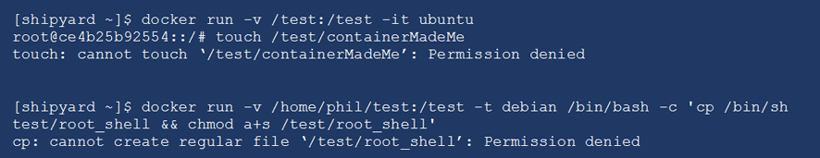

Since Docker version 1.10, user namespaces have been supported, which can be used to mitigate both examples above. As mentioned briefly, user namespaces give Docker the ability to map the UIDs and GIDs used inside a container to different, unprivileged UIDs and GIDs on the host, thus removing the danger shown above where any root user within a container is effectively a root user on the host. This feature is not enabled by default, so the daemon (and possibly the kernel itself) will need to be configured for user namespace support. For more information on enabling and configuring user namespaces on the Docker daemon, see the official Docker documentation and the Docker Namespaces links in the Sources and Links section.
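The kernel exposes a process's UID mapping in /proc/<pid>/uid_map, where each line holds three numbers: the first ID inside the namespace, the first ID outside it, and the length of the range. The sketch below translates a container UID to the corresponding host UID; the function names are hypothetical, and the 100000 starting offset in the sample is an assumed value chosen for illustration, not something Docker guarantees.

```python
def parse_id_map(text):
    """Parse uid_map/gid_map contents into (inside, outside, length) tuples."""
    ranges = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue
        inside, outside, length = (int(f) for f in fields)
        ranges.append((inside, outside, length))
    return ranges


def to_host_id(container_id, ranges):
    """Translate an ID inside the namespace to the host ID, or None if unmapped."""
    for inside, outside, length in ranges:
        if inside <= container_id < inside + length:
            return outside + (container_id - inside)
    return None


# Without remapping, container IDs map straight onto host IDs.
identity = parse_id_map("         0          0 4294967295")
# Hypothetical remapped range: container root becomes host UID 100000.
remapped = parse_id_map("0 100000 65536")

print(to_host_id(0, identity))  # 0
print(to_host_id(0, remapped))  # 100000
```

With a mapping like the second one, a file created by root inside the container is owned by an unprivileged UID on the host, which is why the earlier breakout examples no longer yield root access.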

In the screenshots below, the same tests are run as the previous examples; however, in these cases, user namespaces are enabled. In both examples, the tests fail.

Perhaps the most effective way to isolate containers from the host and each other is to craft SELinux and AppArmor policies. If you are not familiar with SELinux and AppArmor, they are Mandatory Access Control (MAC) security systems built into Linux. In SELinux and AppArmor, a central policy is defined, which determines which actions users and programs may perform and which files they may access. For example, with SELinux, the directories that containers can write to can be specifically labelled. Even if a container is run with a volume mounted, it won’t be able to write to the directory unless an SELinux policy allows it.

The intricacies of SELinux and AppArmor could fill an entire book by themselves, and as such, we won’t delve further into them. For more information, see the Sources and Links section for some examples on how to configure Docker policies.

In conclusion, containers in a PCI DSS environment should only be running one process or application each; for example, a Dockerized web application should, at a minimum, have a separate web server container and database container. Similarly, a Docker host should only be used as a Docker host. Though containers are less isolated than virtual machines by nature, process isolation coupled with user namespaces and a robust SELinux/AppArmor profile should provide enough isolation to comply with PCI requirement 2.2.1.

11.2.1 Perform quarterly internal vulnerability scans.

If containers are used in a cardholder data environment, they are subject to all the same PCI controls as a server would be - this includes vulnerability scanning on the containers. This is extra important for containers, since all the containers running on a host are sharing the host’s kernel, meaning that if containers are not secure or have ineffective permissions, they could potentially exploit kernel level vulnerabilities on the host and affect multiple containers.

PCI Controls

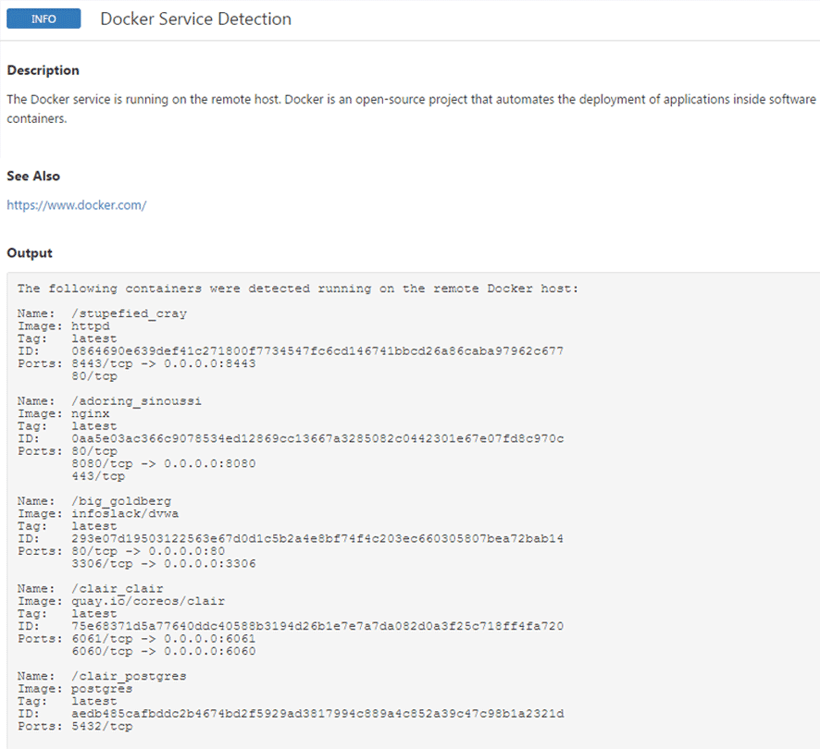

Most of the major commercial vulnerability scanning tools include support for scanning Docker containers. An example would be Nessus, which includes a plugin to detect Docker installations. If it does find Docker installed, Nessus will enumerate and scan the containers on that host. Other commercial container scanning tools include Twistlock and Docker Cloud. There are also several open source and free static container scanners such as Clair and OpenSCAP.

Below is example output from a Nessus scan of my Docker host:

10.1 Implement audit trails to link all access to system components to each individual user.

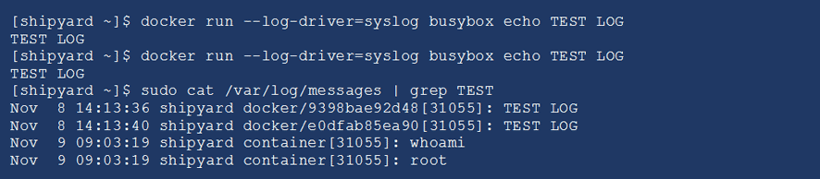

Docker does pose a challenge when it comes to logging: containers are inherently ephemeral. Per Datadog research, the average container lifetime is just 2.5 days. Containers do not persist any data by themselves; if you launch a container without mounting some sort of persistent data volume, all the data within it goes away once the container is removed. Because of this, to maintain the PCI controls for log retention, you must offload container logs to a persistent storage location. Thankfully, Docker has powerful built-in logging capabilities. By default, anything that goes to the container’s STDOUT or STDERR will be logged, meaning that any commands run through the Docker command line or interactively through an “exec -it” will be logged.
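For an application running inside a container, the practical consequence is to write log records to STDOUT or STDERR rather than to a file inside the container, so that whichever logging driver is configured can pick them up. The following is a minimal sketch using Python's standard logging module; the logger name, the message fields, and the `build_logger` helper are hypothetical.

```python
import logging
import sys


def build_logger(name="app", stream=None):
    """Return a logger that writes timestamped records to a stream (STDOUT by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    if not logger.handlers:
        target = stream if stream is not None else sys.stdout
        handler = logging.StreamHandler(target)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger()
    # Include who did what and the outcome so the record is useful as an audit trail.
    log.info("user=%s action=%s result=%s", "jdoe", "login", "success")
```

Because the records leave the container as they are written, they remain available in the central log store after the container itself is gone.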

PCI requires that certain events are captured in requirement 10.2. In the case of a Docker container, a few of these do not apply. For example, failed logins to a container rarely occur. The best practice when using Docker is to NOT install an SSH server in a container, and instead to use “exec -it,” which gives a pseudo-TTY shell. Additionally, there are typically no syslog daemons running in containers (remember, the Docker paradigm is one process per container), so daemon restarts would not be seen. The level of logging inherently present within Docker can often meet the PCI requirements; however, this applies only to the container itself. If you are running a database or web server in the container, you may need a separate logging mechanism for those.

In the example below, I am using the local syslog driver to capture container output. You can see that it is capturing test messages that I am generating in different containers. These logs could then be sent to any central logging server, where they could be configured for threshold alerting and retention.

Conclusion

Docker presents a challenge for both its users and assessors when it comes to PCI DSS compliance, as the requirements did not consider containers. Unfortunately, it can take some mental gymnastics to figure out how the PCI requirements do apply to containers and how containerized applications can meet the requirements. The topics presented in this article are certainly not exhaustive, but contain what seem to be the major hurdles in achieving a PCI-compliant container environment.

And while there are hurdles to be jumped and special attention that is needed when using containers in a cardholder data environment, there are no insurmountable obstacles to achieving PCI compliance.

SOURCES AND LINKS

Docker General Documents

https://docs.docker.com/engine/reference/commandline/dockerd/

Docker Namespaces

https://coderwall.com/p/s_ydlq/using-user-namespaces-on-docker

https://success.docker.com/Datacenter/Apply/Introduction_to_User_Namespaces_in_Docker_Engine

Docker SELinux

https://projectatomic.io/blog/2016/03/dwalsh_selinux_containers/

Docker and iptables

https://fralef.me/docker-and-iptables.html

Docker Hardening Standards

https://benchmarks.cisecurity.org/tools2/docker/CIS_Docker_1.12.0_Benchmark_v1.0.0.pdf

https://web.nvd.nist.gov/view/ncp/repository/checklistDetail?id=655

GitLab Container Registry

https://docs.gitlab.com/ce/administration/container_registry.html

Free Container Vulnerability Scanners

https://github.com/coreos/clair

https://github.com/OpenSCAP/container-compliance

About Phil Dorczuk

Phil Dorczuk is a Senior Associate with Schellman. Prior to joining Schellman, LLC in 2013, Phil worked as a PCI DSS auditor with Coalfire Systems and a consultant at GTRI. At Coalfire, Phil specialized in PCI DSS audits and gap assessments and at GTRI specialized in Cisco network equipment installation and configuration.